I feel like talking about some pure math, just for fun on a Sunday afternooon.

Back in 2006, Dan Christensen did something rather simple and got a surprisingly complex and interesting result. He took a whole bunch of polynomials with integer coefficients and drew their roots as points on the complex plane. The patterns were astounding!

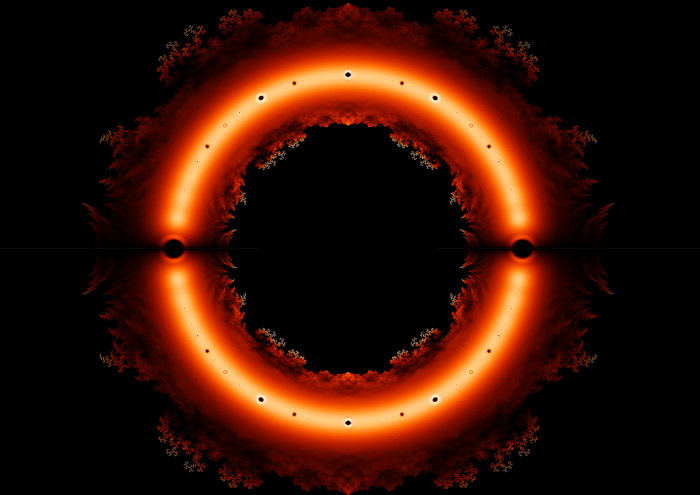

Then Sam Derbyshire joined in the game. After experimenting a bit, he decided that his favorite were polynomials whose coefficients were all 1 or -1. So, he computed all the roots of all the polynomials of this sort having degree 24. That’s 224 polynomials, and about 24 × 224 roots—or about 400 million roots! It took Mathematica four days to generate the coordinates of all these roots, producing about 5 gigabytes of data.

He then plotted all the roots using some Java programs, and created this amazing image:

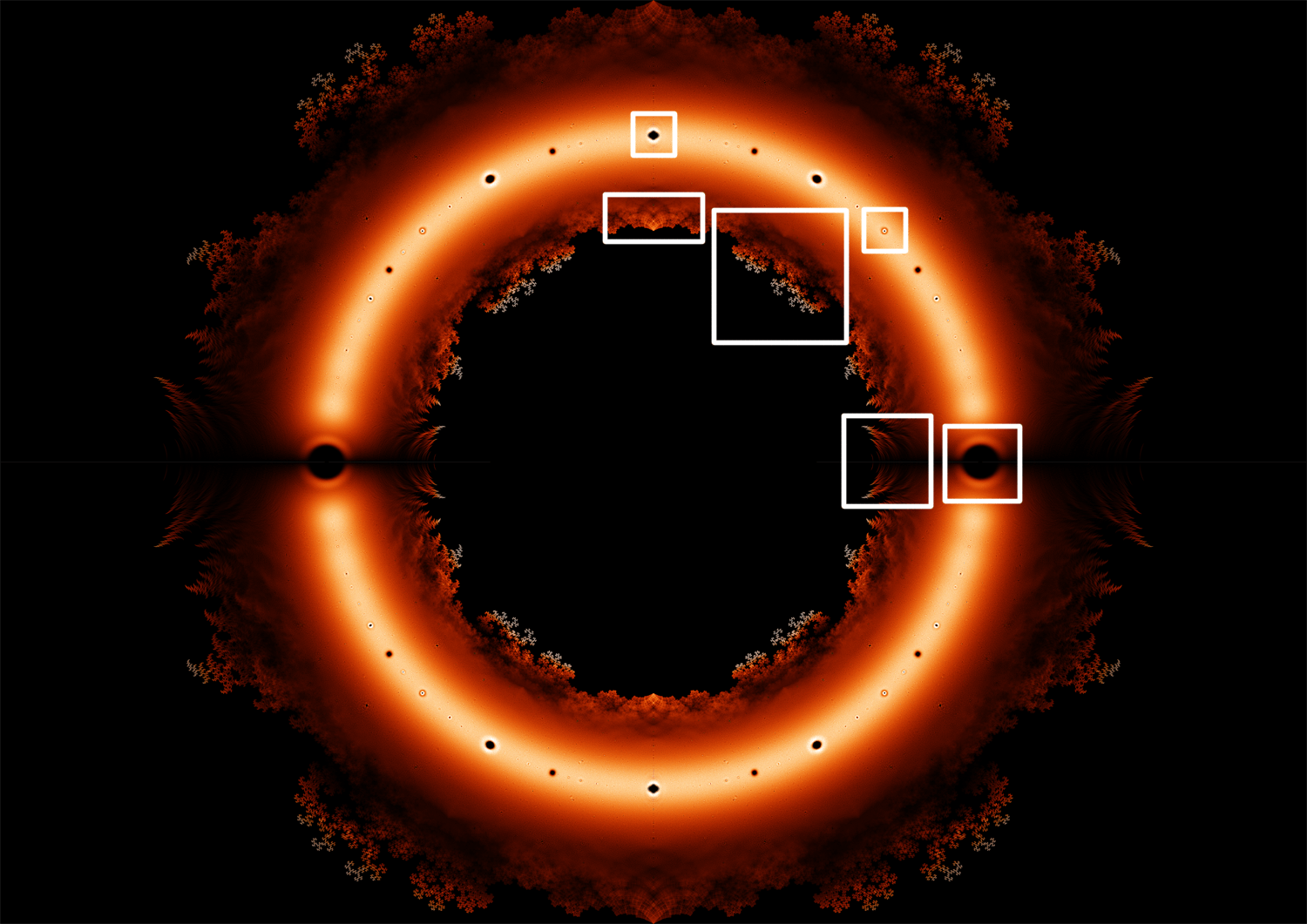

You really need to click on it and see a bigger version, to understand how nice it is. But Sam also zoomed in on specific locations, shown here:

Here’s a closeup of the hole at 1:

Note the line along the real axis! It’s oddly hard to see on my computer screen right now, but it’s there and it’s important. It exists because lots more of these polynomials have real roots than nearly real roots.

Next, here’s the hole at :

And here’s the hole at :

Note how the density of roots increases as we get closer to

this point, but then suddenly drops off right next to it. Note also the subtle patterns in the density of roots.

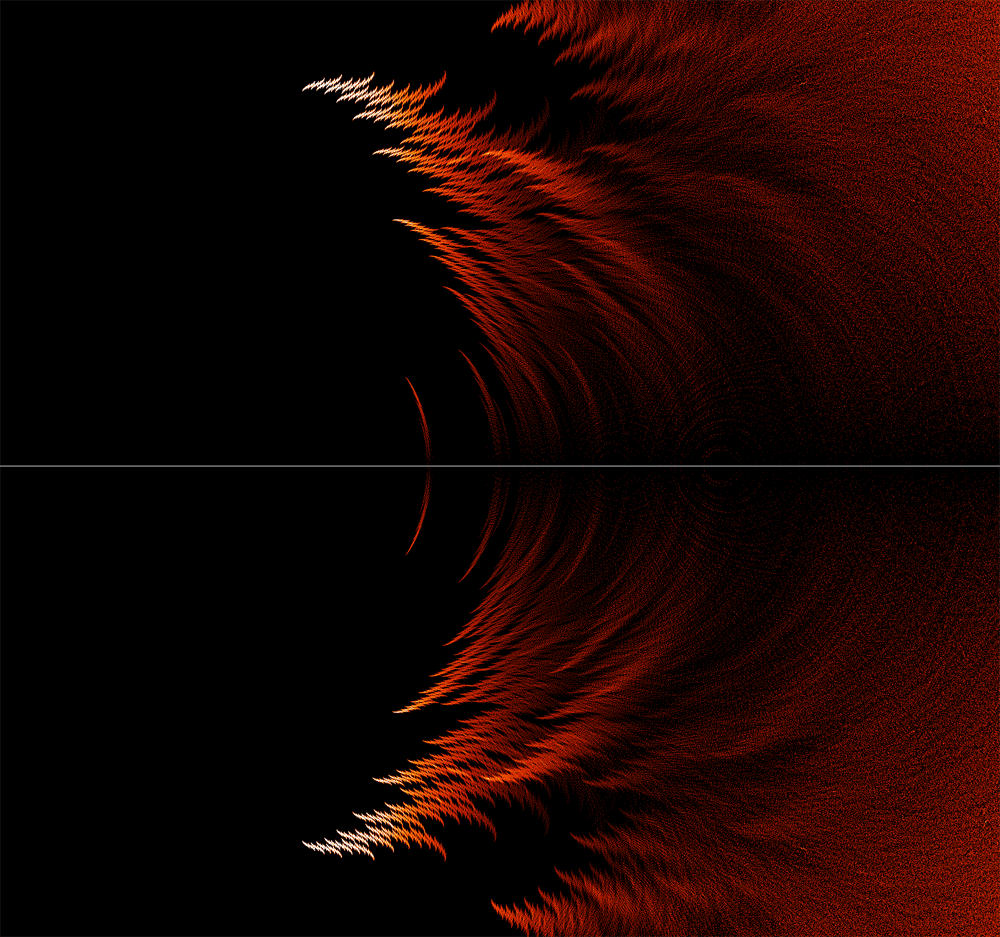

But the feathery structures as we move inside the unit circle are even more beautiful! Here is what they look near the real axis — this plot is centered at the point :

They have a very different character near the point :

But I think my favorite is the region near the point This image is almost a metaphor of how mathematical patterns emerge from confusion… like sharply defined figures looming from the mist as you drive by with your headlights on at night:

Now, you may remember that I said all this already in “week285” of This Week’s Finds. So why am I talking about it again?

Well, the patterns I’ve just showed you are tantalizing, and at first quite mysterious… but ‘some guy on the street’ and Greg Egan figured out how to understand some of them during the discussion of “week285”. The resulting story is quite beautiful! But this discussion was a bit hard to follow, since it involved smart people figuring out things as they went along. So, I doubt many people understood it—at least compared to the number of people who could understand it.

Let me just explain one pattern here. Why does this region near :

look so much like the fractal called a dragon?

You can create a dragon in various ways. In the animated image above, we’re starting with a single horizontal straight line segment (which is not shown for some idiotic reason) and repeatedly doing the same thing. Namely, at each step we’re replacing every segment by two shorter segments at right angles to each other:

At each step, we have a continuous curve. The dragon that appears in the limit of infinitely many steps is also a continuous curve. But it’s a space-filling curve: it has nonzero area!

Here’s another, more relevant way to create a dragon. Take these two functions from the complex plane to itself:

Pick a point in the plane and keep hitting it with either of these functions: you can randomly make up your mind which to use each time. No matter what choices you make, you’ll get a sequence of points that converges… and it converges to a point in the dragon! We can get all points in the dragon this way.

But where did these two functions come from? What’s so special about them?

To get the specific dragon I just showed you, we need these specific functions. They have the effect of taking the horizontal line segment from the point 0 to the point 1, and mapping it to the two segments that form the simple picture shown at the far left here:

As we repeatedly apply them, we get more and more segments, which form the ever more fancy curves in this sequence.

But if all we want is some sort of interesting set of points in the plane, we don’t need to use these specific functions. The most important thing is that our functions be contractions, meaning they reduce distances between points. Suppose we have two contractions and

from the plane to itself. Then there is a unique closed and bounded set

in the plane with

Moreover, suppose we start with some point in the plane and keep hitting it with

and/or

in any way we like. Then we’ll get a sequence that converges to a point in

And even better, every point in

show up as a limit of a sequence like this. We can even get them all starting from the same

All this follows from a famous theorem due to John Hutchinson.

“Cute,” you’re thinking. “But what does this have to do with roots of polynomials whose coefficients are all 1 or -1?”

Well, we can get polynomials of this type by starting with the number 0 and repeatedly applying these two functions, which depend on a parameter :

For example:

and so on. All these polynomials have constant term 1, never -1. But apart from that, we can get all polynomials with coefficients 1 or -1 using this trick. So, we get them all up to an overall sign—and that’s good enough for studying their roots.

Now, depending on what is, the functions

and

will give us different generalized dragon sets. We need

for these functions to be contraction mappings. Given that, we get a generalized dragon set in the way I explained. Let’s call it

to indicate that it depends on

.

Greg Egan drew some of these sets . Here’s one that looks like a dragon:

Here’s one that looks more like a feather:

Now here’s the devastatingly cool fact:

Near the point z in the complex plane, the set Sam Derbyshire drew looks a bit like the generalized dragon set Sz!

The words ‘a bit like’ are weasel words, because I don’t know the precise theorem. If you look at Sam’s picture again:

you’ll see a lot of ‘haze’ near the unit circle, which is where and

cease to be contraction mappings. Outside the unit circle—well, I don’t want to talk about that now! But inside the unit circle, you should be able to see that I’m at least roughly right. For example, if we zoom in near

, we get dragons:

which look at least roughly like this:

In fact they should look very much like this, but I’m too lazy to find the point and zoom in very closely to that point in Sam’s picture, to check!

Similarly, near the point , we get feathers:

that look a lot like this:

Again, it would be more convincing if I could exactly locate the point and zoom in there. But I think I can persuade Dan Christensen to do that for me.

Now there are lots of questions left to answer, like “What about all the black regions in the middle of Sam’s picture?” and “what about those funny-looking holes near the unit circle?” But the most urgent question is this:

If you take the set Sam Derbyshire drew and zoom in near the point z, why should it look like the generalized dragon set Sz?

And the answer was discovered by ‘some guy on the street’—our pseudonymous, nearly anonymous friend. It’s related to something called the Julia–Mandelbrot correspondence. I wish I could explain it clearly, but I don’t understand it well enough to do a really convincing job. So, I’ll just muddle through by copying Greg Egan’s explanation.

First, let’s define a Littlewood polynomial to be one whose coefficients are all 1 or -1.

We have already seen that if we take any number , then we get the image of

under all the Littlewood polynomials of degree

by starting with the point

and applying these functions over and over:

a total of times.

Moreover, we have seen that as we keep applying these functions over and over to , we get sequences that converge to points in the generalized dragon set

.

So, is the set of limits of sequences that we get by taking the number

and applying Littlewood polynomials of larger and larger degree.

Now, suppose 0 is in . Then there are Littlewood polynomials of large degree applied to

that come very close to 0. We get a picture like this:

where the arrows represent different Littlewood polynomials being applied to . If we zoom in close enough that a linear approximation is good, we can see what the inverse image of 0 will look like under these polynomials:

It will look the same! But these inverse images are just the roots of the Littlewood polynomials. So the roots of the Littlewood polynomials near will look like the generalized dragon set

.

As Greg put it:

But if we grab all these arrows:

and squeeze their tips together so that they all map precisely to 0—and if we’re working in a small enough neighbourhood of 0 that the arrows don’t really change much as we move them—the pattern that imposes on the tails of the arrows will look a lot like the original pattern:

There’s a lot more to say, but I think I’ll stop soon. I just want to emphasize that all this is modeled after the incredibly cool relationship between the Mandelbrot set and Julia sets. It goes like this;

Consider this function, which depends on a complex parameter :

If we fix this function defines a map from the complex plane to itself. We can start with any number

and keep applying this map over and over. We get a sequence of numbers. Sometimes this sequence shoots off to infinity and sometimes it doesn’t. The boundary of the set where it doesn’t is called the Julia set for this number

On the other hand, we can start with , and draw the set of numbers

for which the resulting sequence doesn’t shoot off to infinity. That’s called the Mandelbrot set.

Here’s the cool relationship: in the vicinity of the number the Mandelbrot set tends to look like the Julia set for that number

This is especially true right at the boundary of the Mandelbrot set.

For example, the Julia set for

looks like this:

while this:

is a tiny patch of the Mandelbrot set centered at the

same value of They’re shockingly similar!

This is why the Mandelbrot set is so complicated. Julia sets are

already very complicated. But the Mandelbrot set looks like a

lot of Julia sets! It’s like a big picture of someone’s face made of little pictures of different people’s faces.

Here’s a great picture illustrating this fact. As with all the pictures here, you can click on it for a bigger view:

But this one you really must click on!

So, the Mandelbrot set is like an illustrated catalog of Julia sets. Similarly, it seems the set of roots of Littlewood polynomials (up to a given degree) resembles a catalog of generalized dragon sets. However, making this into a theorem would require me to make precise many things I’ve glossed over—mainly because I don’t understand them very well!

So, I’ll stop here.

For references to earlier work on this subject, try “week285”.

Very nice! Maybe you already know this, but the way of drawing fractals that you describe is known as Iterated Function Systems (IFS):

http://en.wikipedia.org/wiki/Iterated_function_system

and here is a free program allowing you to play with them:

http://apophysis.org/

Sam

Yes, I made a link to that Wikipedia page on iterated function systems where I mentioned the famous theorem due to John Hutchinson, which says that there’s a unique closed and bounded set with

with

given a collection of contraction mappings .

.

I’ve never used Apophysis or seen it used!

Hi Sam, I’m trying to re-create the image but can not make it as beautiful as yours, I think the problem is with my coloring scheme. Could you explain me how did you color the roots?

I coloured each pixel depending on how many roots landed in that 1pixel x 1pixel square. You can try various gradients, mine goes from white (for highest density) to yellow to orange to black (for 0 density). It’s important to have the resolution of the image match the number of roots: if you have too few roots for a given resolution, pixels would tend to not contain enough roots, and you can’t do much with that with the colouring.

Mind = Blown

Thanks!

Excellent post, tyvm for breaking it down for us lay people. Most appreciated.

You’re welcome. Mathematicians shouldn’t keep all the fun for themselves.

Out of curiousity, was the first Julia set (and the second one, for that matter) produced using Mathematica?

I think so, but I’m not sure: Greg can answer that!

Yes, those Julia sets were produced with Mathematica.

” He then plotted all the roots using some Java programs, and created this amazing image”

John – any idea/specifics on the Java programs used for this kind of thing?

(By the way: long time listener, first time caller. Enjoy your blog)

Thanks! I don’t know what software Sam Derbyshire used, but I think we’re planning to write a paper on this, so I’ll find out.

It really wasn’t anything fancy, and probably very clumsy and thus took ages to compute.

A Mathematica notebook computed the roots of all these polynomials, and output the data to several files (just storing the coordinates of the roots). Then a few Java scripts written by a friend (Chris Smith) converted this data to a PNG image (with customisable colours).

Using python and scipy, and carefully taking into account the 8-fold symmetry, I can generate the degree 24 roots in about 3 hours, and plot them in about 10 minutes. I store about 55 million roots (again using symmetry).

Here’s a movie Greg Egan created:

It shows subsets of the Derbyshire set of order 16: that is, the roots of polynomials of degree 16 whose coefficients are all 1 or -1. Each frame shows the roots of such polynomials with a particular number of coefficients equal to 1.

Wow what a neat Christmas gift! Sage has a page on their

wiki with several fractal examples:

http://wiki.sagemath.org/interact/fractal

Over on Google+, Sjoerd Visscher wrote:

Dan Christensen asked for details, and Sjoerd said:

John, i tried to use your equation and render my own image at high resolution (A0 poster) but if i calculate just the values for the equation it look very different, I also hat to use a factor like 1e12 or so to even get in the color range (I used plain red in RGB, so values form 0..255, just for the first try):

In the region from -1..1 and -i..i. With

What did i do wrong?

Sorry, you’d have to ask Sam Derbyshire to find out how he made the images! I guess he used some tricks to make them look good.

[…] https://johncarlosbaez.wordpress.com/2011/12/11/the-beauty-of-roots/ […]

Over on Google+, I wrote:

Here is an image created by +Dan Christensen. If you compute all the roots of all degree-24 polynomials with coefficients ±1, and draw the roots that lie near the point 2/3 + i/10, you’ll get a picture like this. The colors are brighter for pixels with more roots in them. Click to enlarge:

Chris Foster:

Carlos Scheidegger:

Chris Foster:

Dan Christensen:

John Douglas Porter:

Carlos Scheidegger;

Linas Vepstas:

John Douglas Porter:

Chris Foster:

Linas Vepstas:

Chris Foster:

John Baez:

John Baez :

John Baez:

Linas Vepstas:

Dan Christensen:

Linas Vepstas;

Linas Vepstas:

Chris Foster:

Linas Vepstas:

Dan Christensen:

Chris Foster:

John Baez:

Chris Foster:

Carlos Scheidegger:

Chris Foster:

Dan Christensen:

Chris Foster:

Sam Derbyshire:

John Baez:

Above you’ll read how Chris Foster found a blazingly fast way to explore the beauty of roots. He has kindly allowed me to show you some of his results here.

Here is Chris Foster’s picture of the roots of degree-20 polynomials with coefficients ±1 in the rectangle [1,1.7]x[0,0.7] in the complex plane. Click to see the full 4000×4000 pixel version!

Later he went further and explored degree-28 polynomials with coefficients ±1. Here are the roots of such polynomials in a region of width 0.001, centered at the point 1.08+ 1.08i in the complex plane:

After more optimization he went up to degree 42! Here are all the roots of all degree-42 polynomials with coefficients ±1 in a region of width 0.00005 centered at the point 1.460126+0.200216i:

(Actually I cropped this picture to remove some of the black region, so the description I just gave isn’t quite right. If the detailed numbers matter to you, click on the picture and get the real thing.)

I think the pattern of white dots here is very charming!

The white dots are the actual roots. I think the orange background around them is just an artifact of the imaging technique. So this is really the familiar “feather” pattern again.

I wrote a Java applet that lets you view the Littlewood roots interactively.

http://www.gregegan.net/SCIENCE/Littlewood/Littlewood.html

This limits the view to a radius of 0.8, and shows a default order of 20, but it can display up to order 36 if you select a smaller view that excludes the dense part of the ring of roots.

The method I’m using is not quite pixel-perfect: a single root will occasionally be displayed in two adjacent pixels rather than just one.

I added a facility to the applet that lets you see the “Julia set” for any point.

Here’s a movie of the changing “dragons” as you move around the root set.

[…] a bit more on the beauty of roots—some things that may have escaped you if you weren’t following this blog […]

[…] excellent post by John Baez restored my faith in google+ and in blogging […]

Ooh… Shiny. This is why I love math.

John Baez:

Nice post, very nice, I draw also something for a student with a source code

And maybe you are interested in the graph of the polynomials in R2 to understand better what is happening in the closure.

https://drive.google.com/open?id=0ByHH63g30hjwX3lUUnl4YkhKQXM&authuser=0

Here are all the pictures and source code

https://drive.google.com/open?id=0ByHH63g30hjwa29QVmRTU0tENTQ&authuser=0

Eduardo Ruiz Duarte

I am being paid to make a web site for someone, and would like to use this as the background. Could you link to this Sam Derbyshire?

If the email address you used in your comment is valid, I can send Sam your comment and your email address. Or, perhaps this email address will work for him.

I’ve emailed him, thank you for the link!

Bohemian eigenvalues are the distribution of the eigenvalues of a random matrix. More specifically, this article explores matrices of low dimension (typically no larger than 10 × 10) whose entries are integers of bounded height. The name “Bohemian” is intended as a mnemonic and is derived from “Bounded Height Integer Matrix Eigenvalues” (BHIME). Here I present an overview of some preliminary results arising from this project.

I know I’m commenting very late, but I love this post and am sad to say all the images hosted on math.ucr.edu have vanished (along with the mirror that was there too).

The server hosting math.ucr.edu went down about 3 weeks ago due to a power outage, along with all other computers at U. C. Riverside. Unfortunately this particular server was unable to reboot so they had to replace it. The machine has been replaced, but only yesterday was I able to log in and tell them where in the directory hierarchy my website was located, so they could make it visible to the world. They are trying to do that now. So, in a few hours, or days, or weeks, my website and all the images it hosts should reappear.

math.ucr.edu is back up! If anyone experiences any problems with it—e.g., missing files—please let me know.