Greg Bernhardt runs an excellent website for discussing physics, math and other topics, called Physics Forums. He recently interviewed me there. Since I used this opportunity to explain a bit about the Azimuth Project and network theory, I thought I’d reprint the interview here. Here is Part 1.

Give us some background on yourself.

I’m interested in all kinds of mathematics and physics, so I call myself a mathematical physicist. But I’m a math professor at the University of California in Riverside. I’ve taught here since 1989. My wife Lisa Raphals got a job here nine years later: among other things, she studies classical Chinese and Greek philosophy.

I got my bachelor’s degree in math at Princeton. I did my undergrad thesis on whether you can use a computer to solve Schrödinger’s equation to arbitrary accuracy. In the end, it became obvious that you can. I was really interested in mathematical logic, and I used some in my thesis (the theory of computable functions), but I decided it wasn’t very helpful in physics. When I read the magnificently poetic last chapter of Misner, Thorne and Wheeler’s Gravitation, I decided that quantum gravity was the problem to work on.

I went to math grad school at MIT, but I didn’t find anyone to work with on quantum gravity. So, I did my thesis on quantum field theory with Irving Segal. He was one of the founders of “constructive quantum field theory”, where you try to rigorously prove that quantum field theories make mathematical sense and obey certain axioms that they should. This was a hard subject, and I didn’t accomplish much, but I learned a lot.

I got a postdoc at Yale and switched to classical field theory, mainly because it was something I could do. On the side I was still trying to understand quantum gravity. String theory was bursting into prominence at the time, and my life would have been easier if I’d jumped onto that bandwagon. But I didn’t like it, because most of the work back then studied strings moving on a fixed “background” spacetime. Quantum gravity is supposed to be about how the geometry of spacetime is variable and quantum-mechanical, so I didn’t want a theory of quantum gravity set on a pre-existing background geometry!

I got a professorship at U.C. Riverside based on my work on classical field theory. But at a conference on that subject in Seattle, I heard Abhay Ashtekar, Chris Isham and Renate Loll give some really interesting talks on loop quantum gravity. I don’t know why they gave those talks at a conference on classical field theory. But I’m sure glad they did! I liked their work because it was background-free and mathematically rigorous. So I started work on loop quantum gravity.

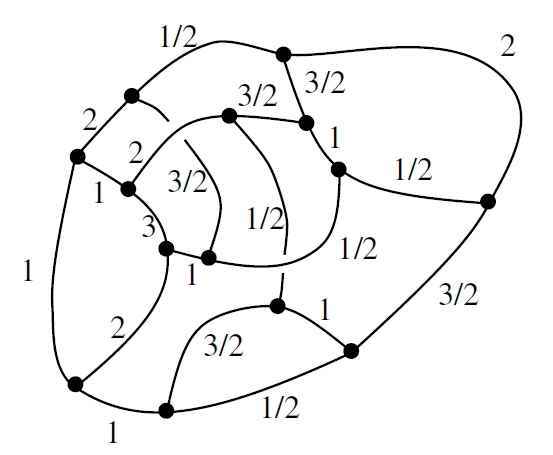

Like many other theories, quantum gravity is easier in low dimensions. I became interested in how category theory lets you formulate quantum gravity in a universe with just 3 spacetime dimensions. It amounts to a radical new conception of space, where the geometry is described in a thoroughly quantum-mechanical way. Ultimately, space is a quantum superposition of “spin networks”, which are like Feynman diagrams. The idea is roughly that a spin network describes a virtual process where particles move around and interact. If we know how likely each of these processes is, we know the geometry of space.

Loop quantum gravity tries to do the same thing for full-fledged quantum gravity in 4 spacetime dimensions, but it doesn’t work as well. Then Louis Crane had an exciting idea: maybe 4-dimensional quantum gravity needs a more sophisticated structure: a “2-category”.

I had never heard of 2-categories. Category theory is about things and processes that turn one thing into another. In a 2-category we also have “meta-processes” that turn one process into another.

I became very excited about 2-categories. At the time I was so dumb I didn’t consider the possibility of 3-categories, and 4-categories, and so on. To be precise, I was more of a mathematical physicist than a mathematician: I wasn’t trying to develop math for its own sake. Then someone named James Dolan told me about n-categories! That was a real eye-opener. He came to U.C. Riverside to work with me. So I started thinking about n-categories in parallel with loop quantum gravity.

Dolan was technically my grad student, but I probably learned more from him than vice versa. In 1996 we wrote a paper called “Higher-dimensional algebra and topological quantum field theory”, which might be my best paper. It’s full of grandiose guesses about n-categories and their connections to other branches of math and physics. We had this vision of how everything fit together. It was so beautiful, with so much evidence supporting it, that we knew it had to be true. Unfortunately, at the time nobody had come up with a good definition of n-category, except for n < 4. So we called our guesses “hypotheses” instead of “conjectures”. In math a conjecture should be something utterly precise: it’s either true or not, with no room for interpretation.

By now, I think everybody more or less believes our hypotheses. Some of the easier ones have already been turned into theorems. Jacob Lurie, a young hotshot at Harvard, improved the statement of one and wrote a 111-page outline of a proof. Unfortunately he still used some concepts that hadn’t been defined. People are working to fix that, and I feel sure they’ll succeed.

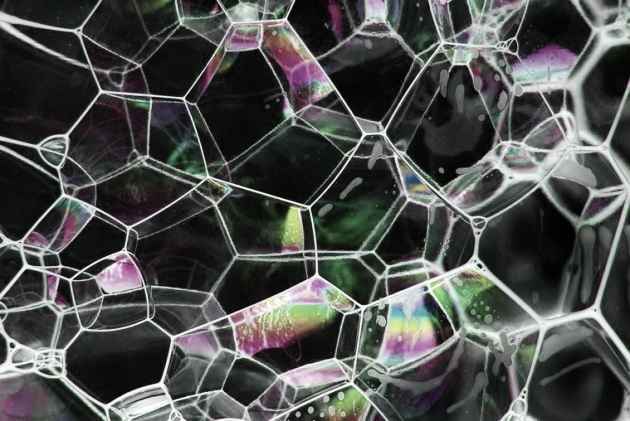

Anyway, I kept trying to connect these ideas to quantum gravity. In 1997, I introduced “spin foams”. These are structures like spin networks, but with an extra dimension. Spin networks have vertices and edges. Spin foams also have 2-dimensional faces: imagine a foam of soap bubbles.

The idea was to use spin foams to give a purely quantum-mechanical description of the geometry of spacetime, just as spin networks describe the geometry of space. But mathematically, what we’re doing here is going from a category to a 2-category.

By now, there are a number of different theories of quantum gravity based on spin foams. Unfortunately, it’s not clear that any of them really work. In 2002, Dan Christensen, Greg Egan and I did a bunch of supercomputer calculations to study this question. We showed that the most popular spin foam theory at the time gave dramatically different answers than people had hoped for. I think we more or less killed that theory.

That left me rather depressed. I don’t enjoy programming: indeed, Christensen and Egan did all the hard work of that sort on our paper. I didn’t want to spend years sifting through spin foam theories to find one that works. And most of all, I didn’t want to end up as an old man still not knowing if my work had been worthwhile! To me n-category theory was clearly the math of the future—and it was easy for me to come up with cool new ideas in that subject. So, I quit quantum gravity and switched to n-categories.

But this was very painful. Quantum gravity is a kind of “holy grail” in physics. When you work on that subject, you wind up talking to lots of people who believe that unifying quantum mechanics and general relativity is the most important thing in the world, and that nothing else could possibly be as interesting. You wind up believing it. It took me years to get out of that mindset.

Ironically, when I quit quantum gravity, I felt free to explore string theory. As a branch of math, it’s really wonderful. I started looking at how n-categories apply to string theory. It turns out there’s a wonderful story here: briefly, particles are to categories as strings are to 2-categories, and all the math of particles can be generalized to strings using this idea! I worked out a bit of this story with Urs Schreiber and John Huerta.

Around 2010, I felt I had to switch to working on environmental issues and math related to engineering and biology, for the sake of the planet. That was another painful renunciation. But luckily, Urs Schreiber and others are continuing to work on n-categories and string theory, and doing it better than I ever could. So I don’t feel the need to work on those things anymore—indeed, it would be hard to keep up. I just follow along quietly from the sidelines.

It’s quite possible that we need a dozen more good ideas before we really get anywhere on quantum gravity. But I feel confident that n-categories will have a role to play. So, I’m happy to have helped push that subject forward.

Your uncle, Albert Baez, was a physicist. How did he help develop your interests?

He had a huge effect on me. He’s mainly famous for being the father of the folk singer Joan Baez. But he started out in optics and helped invent the first X-ray microscope. Later he became very involved in physics education, especially in what were then called third-world countries. For example, in 1951 he helped set up a physics department at the University of Baghdad.

When I was a kid he worked for UNESCO, so he’d come to Washington D.C. and stay with my parents, who lived nearby. Whenever he showed up, he would open his suitcase and pull out amazing gadgets: diffraction gratings, holograms, and things like that. And he would explain how they work! I decided physics was the coolest thing there is.

When I was eight, he gave me a copy of his book The New College Physics: A Spiral Approach. I immediately started trying to read it. The “spiral approach” is a great pedagogical principle: instead of explaining each topic just once, you should start off easy and then keep spiraling around from topic to topic, examining them in greater depth each time you revisit them. So he not only taught me physics, he taught me about how to learn and how to teach.

Later, when I was fifteen, I spent a couple weeks at his apartment in Berkeley. He showed me the Lawrence Hall of Science, which is where I got my first taste of programming—in BASIC, with programs stored on paper tape. This was in 1976. He also gave me a copy of The Feynman Lectures on Physics. And so, the following summer, when I was working at a state park building trails and such, I was also trying to learn quantum mechanics from the third volume of The Feynman Lectures. The other kids must have thought I was a complete geek—which of course I was.

Give us some insight on what your average work day is like.

During the school year I teach two or three days a week. On days when I teach, that’s the focus of my day: I try to prepare my classes starting at breakfast. Teaching is lots of fun for me. Right now I’m teaching two courses: an undergraduate course on game theory and a graduate course on category theory. I’m also running a seminar on category theory. In addition, I meet with my graduate students for a four-hour session once a week: they show me what they’ve done, and we try to push our research projects forward.

On days when I don’t teach, I spend a lot of time writing. I love blogging, so I could easily do that all day, but I try to spend a lot of time writing actual papers. Any given paper starts out being tough to write, but near the end it practically writes itself. At the end, I have to tear myself away from it: I keep wanting to add more. At that stage, I feel an energetic glow at the end of a good day spent writing. Few things are so satisfying.

During the summer I don’t teach, so I can get a lot of writing done. I spent two years doing research at the Centre of Quantum Technologies, which is in Singapore, and since 2012 I’ve been working there during summers. Sometimes I bring my grad students, but mostly I just write.

I also spend plenty of time doing things with my wife, like talking, cooking, shopping, and working out at the gym. We like to watch TV shows in the evening, mainly mysteries and science fiction.

We also do a lot of gardening. When I was younger that seemed boring but as you get older, subjective time speeds up, so you pay more attention to things like plants growing. There’s something tremendously satisfying about planting a small seedling, watching it grow into an orange tree, and eating its fruit for breakfast.

I love playing the piano and recording electronic music, but doing it well requires big blocks of time, which I don’t always have. Music is pure delight, and if I’m not listening to it I’m usually composing it in my mind.

If I gave in to my darkest urges and became a decadent wastrel, I might spend all day blogging, listening to music, recording music and working on pure math. But I need other things to stay sane.

What research are you working on at the moment?

Lately I’ve been trying to finish a paper called “Struggles with the Continuum”. It’s about the problems physics has with infinities, due to the assumption that spacetime is a continuum. At certain junctures this paper became psychologically difficult to write, since it’s supposed to include a summary of quantum field theory, which is a complicated and sprawling subject. So, I’ve resorted to breaking this paper into blog articles and posting them on Physics Forums, just to motivate myself.

Purely for fun, I’ve been working with Greg Egan on some projects involving the octonions. The octonions are a number system where you can add, subtract, multiply and divide. Such number systems only exist in 1, 2, 4, and 8 dimensions: you’ve got the real numbers, which form a line, the complex numbers, which form a plane, the quaternions, which are 4-dimensional, and the octonions, which are 8-dimensional. The octonions are the biggest, but also the weirdest. For example, multiplication of octonions violates the associative law: (xy)z is not always equal to x(yz). So the octonions sound completely crazy at first, but they turn out to have fascinating connections to string theory and other things. They’re pretty addictive, and if I became a decadent wastrel I would spend a lot more time on them.
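To make the failure of associativity concrete, here is a minimal sketch in Python. It builds the complex numbers, quaternions and octonions by repeatedly doubling the real numbers with the Cayley-Dickson construction. The doubling formula is one common sign convention among several, and the helper names (`conj`, `mul`, `unit`, `norm_sq`) are made up for this illustration; none of it comes from the interview itself.

```python
def conj(x):
    """Conjugate: (a, b)* = (a*, -b); a real number is its own conjugate."""
    if len(x) == 1:
        return list(x)
    h = len(x) // 2
    return conj(x[:h]) + [-t for t in x[h:]]


def mul(x, y):
    """Cayley-Dickson product: (a, b)(c, d) = (ac - d*b, da + bc*).

    x and y are lists of equal length 1, 2, 4 or 8 (reals, complex
    numbers, quaternions, octonions).
    """
    if len(x) == 1:
        return [x[0] * y[0]]
    h = len(x) // 2
    a, b, c, d = x[:h], x[h:], y[:h], y[h:]
    first = [s - t for s, t in zip(mul(a, c), mul(conj(d), b))]
    second = [s + t for s, t in zip(mul(d, a), mul(b, conj(c)))]
    return first + second


def unit(n, k):
    """The k-th basis element of the n-dimensional algebra."""
    e = [0] * n
    e[k] = 1
    return e


def norm_sq(x):
    """Squared length of x."""
    return sum(t * t for t in x)


# Quaternions (dimension 4): multiplication is associative.
i, j, k = unit(4, 1), unit(4, 2), unit(4, 3)
quaternions_associate = mul(mul(i, j), k) == mul(i, mul(j, k))

# Octonions (dimension 8): the two bracketings differ by a sign here.
e1, e2, e4 = unit(8, 1), unit(8, 2), unit(8, 4)
lhs = mul(mul(e1, e2), e4)   # (xy)z
rhs = mul(e1, mul(e2, e4))   # x(yz)

print("quaternions associate on i, j, k:", quaternions_associate)
print("(e1 e2) e4 =", lhs)
print("e1 (e2 e4) =", rhs)
```

Division still works in 8 dimensions because lengths multiply: `norm_sq(mul(x, y))` equals `norm_sq(x) * norm_sq(y)` for octonions, so a product of nonzero octonions is never zero.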

There’s a concept of “integer” for the octonions, and integral octonions form a lattice, a repeating pattern of points, in 8 dimensions. This is called the E8 lattice. There’s another lattice that lives in 24 dimensions, called the “Leech lattice”. Both are connected to string theory. Notice that 8+2 equals 10, the dimension superstrings like to live in, and 24+2 equals 26, the dimension bosonic strings like to live in. That’s not a coincidence! The 2 here comes from the 2-dimensional world-sheet of the string.

Since 3×8 is 24, Egan and I became interested in how you could build the Leech lattice from 3 copies of the E8 lattice. People already knew a trick for doing it, but it took us a while to understand how it worked, and then Egan showed you could do this trick in exactly 17,280 ways! I want to write up the proof. There’s a lot of beautiful geometry here.

There’s something really exhilarating about struggling to reach the point where you have some insight into these structures and how they’re connected to physics.

My main work, though, involves using category theory to study networks. I’m interested in networks of all kinds, from electrical circuits to neural networks to “chemical reaction networks” and many more. Different branches of science and engineering focus on different kinds of networks. But there’s not enough communication between researchers in different subjects, so it’s up to mathematicians to set up a unified theory of networks.

I’ve got seven grad students working on this project—or actually eight, if you count Brendan Fong: I’ve been helping him on his dissertation, but he’s actually a student at Oxford.

Brendan was the first to join the project. I wanted him to work on electrical circuits, which are a nice familiar kind of network, a good starting point. But he went much deeper: he developed a general category-theoretic framework for studying networks. We then applied it to electrical circuits, and other things as well.

Blake Pollard is a student of mine in the physics department here at U. C. Riverside. Together with Brendan and me, he developed a category-theoretic approach to Markov processes: random processes where a system hops around between different states. We used Brendan’s general formalism to reduce Markov processes to electrical circuits. Now Blake is going further and using these ideas to tackle chemical reaction networks.
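As a rough illustration of what "hopping around between states" means, here is a small sketch of a two-state Markov process in Python. It evolves a probability distribution under the master equation dp/dt = Hp using plain Euler steps. The rates, the matrix `H` and the function names are assumptions chosen for the example; this is not the category-theoretic formalism described above.

```python
def euler_step(H, p, dt):
    """One Euler step of the master equation dp/dt = H p."""
    n = len(p)
    return [p[i] + dt * sum(H[i][j] * p[j] for j in range(n)) for i in range(n)]


def evolve(H, p, dt, steps):
    """Apply euler_step repeatedly, starting from distribution p."""
    for _ in range(steps):
        p = euler_step(H, p, dt)
    return p


# Hypothetical rates: hop from state 0 to state 1 at rate a, and back at rate b.
a, b = 1.0, 2.0

# Each column of H sums to zero, so total probability is conserved.
H = [[-a, b],
     [a, -b]]

# Start with the system certainly in state 0.
p_final = evolve(H, [1.0, 0.0], dt=0.01, steps=5000)
print("long-time distribution:", p_final)
print("expected equilibrium:  ", [b / (a + b), a / (a + b)])
```

The distribution settles down to an equilibrium where the flow of probability from state 0 to state 1 balances the flow back, much as currents balance in a circuit.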

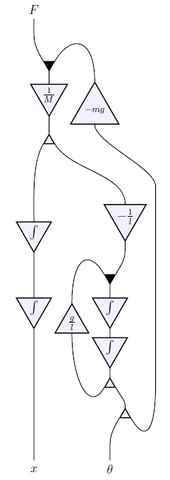

My other students are in the math department at U. C. Riverside. Jason Erbele is working on “control theory”, a branch of engineering where you try to design feedback loops to make sure processes run in a stable way. Control theory uses networks called “signal flow diagrams”, and Jason has worked out how to understand these using category theory.

Jason isn’t using Brendan’s framework: he’s using a different one, called PROPs, which were developed a long time ago for use in algebraic topology. My student Franciscus Rebro has been developing it further, for use in our project. It gives a nice way to describe networks in terms of their basic building blocks. It also illuminates the similarity between signal flow diagrams and Feynman diagrams! They’re very similar, but there’s a big difference: in signal flow diagrams the signals are classical, while Feynman diagrams are quantum-mechanical.

My student Brandon Coya has been working on electrical circuits. He’s sort of continuing what Brendan started, and unifying Brendan’s formalism with PROPs.

My student Adam Yassine is starting to work on networks in classical mechanics. In classical mechanics you usually consider a single system: you write down the Hamiltonian, you get the equations of motion, and you try to solve them. He’s working on a setup where you can take lots of systems and hook them up into a network.

My students Kenny Courser and Daniel Cicala are digging deeper into another aspect of network theory. As I hinted earlier, a category is about things and processes that turn one thing into another. In a 2-category we also have “meta-processes” that turn one process into another. We’re starting to bring 2-categories into network theory.

For example, you can use categories to describe an electrical circuit as a process that turns some inputs into some outputs. You put some currents in one end and some currents come out the other end. But you can also use 2-categories to describe “meta-processes” that turn one electrical circuit into another. An example of a meta-process would be a way of simplifying an electrical circuit, like replacing two resistors in series by a single resistor.
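Here is a toy sketch of that idea in Python. A chain of resistors in series plays the role of a process, hooking two chains end to end is composition, and `simplify` is a meta-process that replaces a chain by a single equivalent resistor. The class and function names are invented for this example, and the model is far cruder than the actual framework: it only tracks resistances in ohms.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class SeriesCircuit:
    """A chain of resistors in series; resistances are in ohms."""
    resistances: Tuple[float, ...]

    def then(self, other: "SeriesCircuit") -> "SeriesCircuit":
        """Compose two circuits by attaching the output of one to the input of the other."""
        return SeriesCircuit(self.resistances + other.resistances)

    def voltage_drop(self, current: float) -> float:
        """Voltage across the whole chain for a given current, by Ohm's law."""
        return current * sum(self.resistances)


def simplify(circuit: SeriesCircuit) -> SeriesCircuit:
    """Meta-process: replace resistors in series by one equivalent resistor."""
    return SeriesCircuit((sum(circuit.resistances),))


two_resistors = SeriesCircuit((2.0,)).then(SeriesCircuit((3.0,)))
one_resistor = simplify(two_resistors)

print(two_resistors, "->", one_resistor)
print(two_resistors.voltage_drop(0.5), one_resistor.voltage_drop(0.5))
```

The two circuits are different as diagrams, but they behave the same way: any current gives the same voltage drop across both. The meta-process records exactly that relationship between them.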

Ultimately I want to push these ideas in the direction of biochemistry. Biology seems complicated and “messy” to physicists and mathematicians, but I think there must be a beautiful logic to it. It’s full of networks, and these networks change with time. So, 2-categories seem like a natural language for biology.

It won’t be easy to convince people of this, but that’s okay.

Thanks for posting this here John – I found it by turns informative, entertaining and inspiring (and a bit suspenseful; I’m left wondering what will be in Part 2:-)

Thanks! I’ll post part 2 on Monday.

This is a fascinating story. In theory I already knew a lot of your history “as it happened”, but it’s good to have a “long view” which gives a different perspective.

Thanks! Yes, you know me pretty well—and for that very reason, I never told you my whole life story all at once.

It was fun having an opportunity to think about it. It made me both happy and frustrated.

Does 17280 come from (4!)(6!), or , or something more complicated?


This is a dangerously fun question. I’ll try to keep my answer brief.

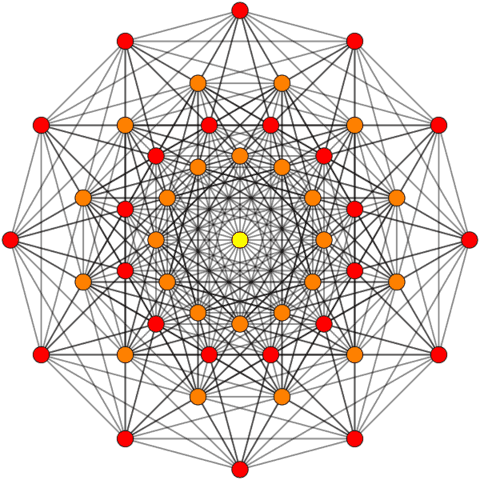

In 8 dimensions, when you pack spheres in the densest possible lattice, you get the ‘E8 lattice’. Each sphere has a bunch of nearest neighbors. It has even more ‘second nearest neighbors’.
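For anyone who wants to count these neighbors, here is a brute-force sketch in Python. It assumes a standard coordinate description of the E8 lattice that is not spelled out above: points whose 8 coordinates are all integers or all half-integers, with an even sum. The code doubles every coordinate so it can work with whole numbers, and the function name is made up for this example.

```python
from itertools import product


def e8_shell_counts():
    """Count E8 lattice vectors of squared length 2 and 4.

    A lattice vector x is stored as v = 2x, so the entries of v are
    all even or all odd, their sum is a multiple of 4, and
    |x|^2 = |v|^2 / 4.
    """
    counts = {2: 0, 4: 0}
    # Even entries up to 4 and odd entries up to 3 cover every v with |v|^2 <= 16.
    for entries in ((-4, -2, 0, 2, 4), (-3, -1, 1, 3)):
        for v in product(entries, repeat=8):
            if sum(v) % 4 != 0:
                continue  # not a lattice point
            length_sq = sum(t * t for t in v)
            if length_sq == 8:       # |x|^2 = 2: nearest neighbors
                counts[2] += 1
            elif length_sq == 16:    # |x|^2 = 4: second nearest neighbors
                counts[4] += 1
    return counts


counts = e8_shell_counts()
print("nearest neighbors:       ", counts[2])   # 240
print("second nearest neighbors:", counts[4])   # 2160
```

So each sphere touches 240 others, and there are 2160 second nearest neighbors.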

The centers of the nearest neighbors are the corners of a beautiful jewel—the 8-dimensional analogue of this:

If you expand this jewel and rotate it just right, you can make its corners lie at the centers of some of the second nearest neighbors! There are exactly 270 ways to do this.

To build a copy of the Leech lattice from three copies of the E8 lattice, you need to choose two disjoint ways to do this. That is, none of the corners of your first expanded, rotated jewel can be corners of the second expanded, rotated jewel.

There are 270 ways to pick the first expanded, rotated jewel, and for each of these there are 64 ways to pick the second, disjoint one.

270 times 64 is 17,280.
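A quick check of the arithmetic in Python, which also shows that the (4!)(6!) guess in the question gives the same number:

```python
from math import factorial

jewel_pairs = 270 * 64                        # first jewel, then a disjoint second one
factorial_guess = factorial(4) * factorial(6)  # the (4!)(6!) from the question

print(jewel_pairs, factorial_guess)  # 17280 17280
```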

So, I’d say the ‘explanation’ of the number 17,280 is this:

I think you need to give yourself more credit for bringing together people from different intellectual fields and thereby catalysing the work of others. You do this through online blogs and forums (and I checked part 2 so I know you mention it a little there). I applaud you for that. I am sure there must be a good joke about studying networks by networking on the internet, but punchlines are not my forte.

My professional life has mostly been concerned with models of transportation networks (highways, railways etc) and the way people use them. I am obviously convinced that networks (and stochastic networks) turn up everywhere. I am interested, and I can follow, when you frame your analysis in terms of categories and n-categories, but I feel alienated when you push the analogies to quantum mechanics too forcefully.

Thank you for the work.

Roger, I peeked at part 2, and appreciate more than ever the potential for scientific creativity that the Azimuth Project has.

Regarding stochastic models of transportation, I have written about it here

http://mobjectivist.blogspot.com/2010/04/dispersion-and-train-delays.html

Train switching is indeed a network, and it would be interesting to work it out to see whether it makes much difference in the limit.

You’re doing really interesting stuff on the Azimuth Project, Paul. I wish I could lure you into posting a kind of summary or progress report on the Azimuth Blog. There’s a standard procedure for doing this. I think it would get your work more attention.

Thanks John, I’m working at finding a home for the white paper I wrote on modeling QBO. This is a link to a markup rendered as a high-res PNG:

http://ContextEarth.com/2015/10/22/pukites-model-of-the-quasi-biennial-oscillation/qbo_paper/

I tried placing a PDF version on the arXiv as part of the process of submitting to a journal, but it was removed by the admins for reasons explained to me in an email.

Paul, neat analysis from a user perspective. I have not done that much work on trains – just 16 months at London Underground – but I can fill in some gaps for you.

Trains do, indeed, obey speed limits. Each point on the track has a maximum speed so that we can guarantee the train won’t derail. The driver must ensure that his train does not exceed the lowest speed limit under his wheels (so the speed profile of the journey depends on the length of the train).

On the Underground, trains also have limits (both upper and lower) on acceleration and jerk (the third derivative of position with respect to time). These limits are set because people stand on trains and we don’t want to knock them over.

However, train performance on the Underground is pretty uniform and variations in driver behaviour are also fairly small. The main variability comes from the ‘dwell time’, i.e. the time between wheel stop and wheel start. This is the period when passengers are boarding and alighting. It depends on the number of passengers going through the doors and the distribution of passengers along the length of the train (although the door mechanism imposes a lower bound).

Once delays due to dwell get into the system you can get positive feedback. If you assume passenger arrivals per unit time are fairly even, the increased gap between services means that more passengers will be getting on the delayed train, which delays it further. Meanwhile there will be fewer passengers waiting in the gap behind the delayed train, so the following train tends to run faster and catch up. (This logic also applies to buses and explains why you wait ages for a bus and then three turn up at once.) The only way to get the service back on an even keel is to deliberately introduce extra delay for the vehicle at the rear.

Thanks Roger. Yes, the reason I created a PDF in that form was because of the maximum speed imposed on the trains. Your speed limit rationale is apt.

On the other end, I recall leaving Gatwick Airport in a train that crept along slower than walking pace for a while.

Agree that London Underground doesn’t have the variation that you find in the country. Also you probably won’t find that variation in Switzerland where from my experience the arrival times are very tight between cities.

The modeling overall is an interesting mix of stochastic and deterministic elements. The scheduling imposes a regularity that eventually causes the randomness to sync up again. It sits somewhere between semi-Markov and jitter-based stochastic modeling.

Roger wrote:

Thanks. I actually feel like a bit of a failure in this respect, because my interests keep changing, so while I keep bringing people together, I keep leaving these people behind. I recently looked back at an “Addendum” to one of the issues of This Week’s Finds — “week234”, to be precise. I was impressed by the crowd of good mathematicians who wrote fascinating comments. Those people are largely lost to me now: apparently they’re not interested in Azimuth. That makes me feel sad. If I’d treated ‘building a community’ as a goal worth pursuing for its own sake, and put time and thought into it, I could have done a lot better.

I got really excited when I noticed a strange analogy between probability theory and quantum mechanics — an analogy that makes no physical sense, because it treats amplitudes as analogous to probabilities. I noticed this when learning about chemical reaction networks: the mathematics of these is closely analogous to what you see in quantum field theory. I got really excited, then I discovered other people had done a lot of work on this analogy but somehow hadn’t clarified it or underscored its strangeness. I wrote a book about it with Jacob Biamonte. But now I’m largely done with that.

That was me first dipping my toe into network theory, starting around 2010. Since quantum field theory was something I knew well, it was exciting to see the same math showing up in chemical reaction network theory. But now I have a bigger picture of network theory, and this analogy is not the main point, although I still believe it’s profoundly mysterious and needs to be understood better.

Thank you! All your comments on this blog are interesting, and I’m grateful for them.

[…] a wonderful interview with John Baez in two parts, here and […]

[…] In this work we give the complete theory and numerous references to work of other people that was along the same lines. We employ a “spiral” approach to the presentation of the results, inspired by the pedagogical principle of Albert Baez. […]
