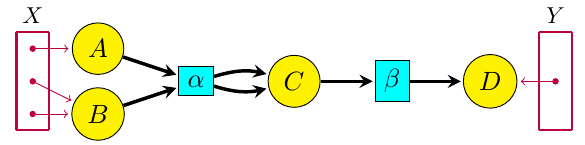

For a long time Blake Pollard and I have been working on ‘open’ chemical reaction networks: that is, networks of chemical reactions where some chemicals can flow in from an outside source, or flow out. The picture to keep in mind is something like this:

where the yellow circles are different kinds of chemicals and the aqua boxes are different reactions. The purple dots in the sets X and Y are ‘inputs’ and ‘outputs’, where certain kinds of chemicals can flow in or out.

Here’s our paper on this stuff:

• John Baez and Blake Pollard, A compositional framework for reaction networks, Reviews in Mathematical Physics 29 (2017), 1750028.

Blake and I gave talks about this stuff in Luxembourg this June, at a nice conference called Dynamics, thermodynamics and information processing in chemical networks. So, if you’re the sort who prefers talk slides to big scary papers, you can look at those:

• John Baez, The mathematics of open reaction networks.

• Blake Pollard, Black-boxing open reaction networks.

But I want to say here what we do in our paper, because it’s pretty cool, and it took a few years to figure it out. To get things to work, we needed my student Brendan Fong to invent the right category-theoretic formalism: ‘decorated cospans’. But we also had to figure out the right way to think about open dynamical systems!

In the end, we figured out how to first ‘gray-box’ an open reaction network, converting it into an open dynamical system, and then ‘black-box’ it, obtaining the relation between input and output flows and concentrations that holds in steady state. The first step extracts the dynamical behavior of an open reaction network; the second extracts its static behavior. And both these steps are functors!

Lawvere had the idea that the process of assigning ‘meaning’ to expressions could be seen as a functor. This idea has caught on in theoretical computer science: it’s called ‘functorial semantics’. So, what we’re doing here is applying functorial semantics to chemistry.

Now Blake has passed his thesis defense based on this work, and he just needs to polish up his thesis a little before submitting it. This summer he’s doing an internship at the Princeton branch of the engineering firm Siemens. He’s working with Arquimedes Canedo on ‘knowledge representation’.

But I’m still eager to dig deeper into open reaction networks. They’re a small but nontrivial step toward my dream of a mathematics of living systems. My working hypothesis is that living systems seem ‘messy’ to physicists because they operate at a higher level of abstraction. That’s what I’m trying to explore.

Here’s the idea of our paper.

The idea

Reaction networks are a very general framework for describing processes where entities interact and transform into other entities. While they first showed up in chemistry, and are often called ‘chemical reaction networks’, they have lots of other applications. For example, a basic model of infectious disease, the ‘SIRS model’, is described by this reaction network:

We see here three types of entity, called species:

• $S$: susceptible,

• $I$: infected,

• $R$: resistant.

We also have three ‘reactions’:

• $\iota\colon S + I \to 2I$: infection, in which a susceptible individual meets an infected one and becomes infected;

• $\rho\colon I \to R$: recovery, in which an infected individual gains resistance to the disease;

• $\lambda\colon R \to S$: loss of resistance, in which a resistant individual becomes susceptible.

In general, a reaction network involves a finite set of species, but reactions go between complexes, which are finite linear combinations of these species with natural number coefficients. The reaction network is a directed graph whose vertices are certain complexes and whose edges are called reactions.

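If we attach a positive real number called a rate constant to each reaction, a reaction network determines a system of differential equations saying how the concentrations of the species change over time. This system of equations is usually called the rate equation. In the example I just gave, the rate equation is

$$\frac{dS}{dt} = r_\lambda R - r_\iota S I, \qquad \frac{dI}{dt} = r_\iota S I - r_\rho I, \qquad \frac{dR}{dt} = r_\rho I - r_\lambda R$$

Here $r_\iota$, $r_\rho$ and $r_\lambda$ are the rate constants for infection, recovery and loss of resistance, and $S$, $I$ and $R$ now stand for the concentrations of the three species, which are treated in a continuum approximation as smooth functions of time:

$$S, I, R \colon \mathbb{R} \to [0,\infty)$$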

The rate equation can be derived from the law of mass action, which says that any reaction occurs at a rate equal to its rate constant times the product of the concentrations of the species entering it as inputs.
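To make the law of mass action concrete, here is a minimal Python sketch that computes the right-hand side of the rate equation for the SIRS network. The dictionary encoding of complexes, the function name `rate_equation` and the numerical rate constants are all assumptions made up for this illustration; they are not taken from the paper.

```python
from math import prod

# Each reaction is (inputs, outputs, rate constant).
# A complex is a dict sending each species to its natural number coefficient.
# The rate constant values below are arbitrary illustrative choices.
SIRS = [
    ({"S": 1, "I": 1}, {"I": 2}, 0.5),  # infection: S + I -> 2I
    ({"I": 1}, {"R": 1}, 0.2),          # recovery: I -> R
    ({"R": 1}, {"S": 1}, 0.1),          # loss of resistance: R -> S
]

def rate_equation(reactions, conc):
    """Return the time derivative of each concentration under mass action."""
    deriv = {s: 0.0 for s in conc}
    for inputs, outputs, k in reactions:
        # Mass action: rate constant times product of input concentrations.
        rate = k * prod(conc[s] ** n for s, n in inputs.items())
        for s, n in inputs.items():
            deriv[s] -= n * rate   # inputs are consumed
        for s, n in outputs.items():
            deriv[s] += n * rate   # outputs are produced
    return deriv

d = rate_equation(SIRS, {"S": 0.9, "I": 0.1, "R": 0.0})
```

Since every reaction here conserves the number of individuals, the three derivatives sum to zero, which is a handy sanity check.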

But a reaction network is more than just a stepping-stone to its rate equation! Interesting qualitative properties of the rate equation, like the existence and uniqueness of steady state solutions, can often be determined just by looking at the reaction network, regardless of the rate constants. Results in this direction began with Feinberg and Horn’s work in the 1960’s, leading to the Deficiency Zero and Deficiency One Theorems, and more recently to Craciun’s proof of the Global Attractor Conjecture.

In our paper, Blake and I present a ‘compositional framework’ for reaction networks. In other words, we describe rules for building up a reaction network from smaller pieces, in such a way that its rate equation can be figured out knowing those of the pieces. But this framework requires that we view reaction networks in a somewhat different way, as ‘Petri nets’.

Petri nets were invented by Carl Petri in 1939, when he was just a teenager, for the purposes of chemistry. Much later, they became popular in theoretical computer science, biology and other fields. A Petri net is a bipartite directed graph: vertices of one kind represent species, vertices of the other kind represent reactions. The edges into a reaction specify which species are inputs to that reaction, while the edges out specify its outputs.

You can easily turn a reaction network into a Petri net and vice versa. For example, the reaction network above translates into this Petri net:

Beware: there are a lot of different names for the same thing, since the terminology comes from several communities. In the Petri net literature, species are called places and reactions are called transitions. In fact, Petri nets are sometimes called ‘place-transition nets’ or ‘P/T nets’. On the other hand, chemists call them ‘species-reaction graphs’ or ‘SR-graphs’. And when each reaction of a Petri net has a rate constant attached to it, it is often called a ‘stochastic Petri net’.

While some qualitative properties of a rate equation can be read off from a reaction network, others are more easily read from the corresponding Petri net. For example, properties of a Petri net can be used to determine whether its rate equation can have multiple steady states.

Petri nets are also better suited to a compositional framework. The key new concept is an ‘open’ Petri net. Here’s an example:

The box at left is a set X of ‘inputs’ (which happens to be empty), while the box at right is a set Y of ‘outputs’. Both inputs and outputs are points at which entities of various species can flow in or out of the Petri net. We say the open Petri net goes from X to Y. In our paper, we show how to treat it as a morphism in a category we call $\mathrm{RxNet}$.

Given an open Petri net with rate constants assigned to each reaction, our paper explains how to get its ‘open rate equation’. It’s just the usual rate equation with extra terms describing inflows and outflows. The above example has this open rate equation:

The extra terms are arbitrary smooth functions describing outflows as a function of time.

Given another open Petri net, for example this:

it will have its own open rate equation, in this case

The extra terms are arbitrary smooth functions describing inflows as a function of time. Now for a tiny bit of category theory: we can compose these two open Petri nets by gluing the outputs of the first to the inputs of the second. This gives a new open Petri net, as follows:

But this open Petri net has an empty set of inputs, and an empty set of outputs! So it amounts to an ordinary Petri net, and its open rate equation is a rate equation of the usual kind. Indeed, this is the Petri net we have already seen.

As it turns out, there’s a systematic procedure for combining the open rate equations for two open Petri nets to obtain that of their composite. In the example we’re looking at, we just identify the outflows of the first with the inflows of the second (setting each inflow equal to the corresponding outflow) and then add the right hand sides of their open rate equations.

The first goal of our paper is to precisely describe this procedure, and to prove that it defines a functor from $\mathrm{RxNet}$ to a category $\mathrm{Dynam}$ where the morphisms are ‘open dynamical systems’. By a dynamical system, we essentially mean a vector field on $\mathbb{R}^n$, which can be used to define a system of first-order ordinary differential equations in $n$ variables. An example is the rate equation of a Petri net. An open dynamical system allows for the possibility of extra terms that are arbitrary functions of time, such as the inflows and outflows in an open rate equation.

In fact, we prove that $\mathrm{RxNet}$ and $\mathrm{Dynam}$ are symmetric monoidal categories and that this functor is a symmetric monoidal functor. To do this, we use Brendan Fong’s theory of ‘decorated cospans’.

Decorated cospans are a powerful general tool for describing open systems. A cospan in any category is just a diagram like this:

We are mostly interested in cospans in the category of finite sets and functions between these. The set $S$, the so-called apex of the cospan, is the set of states of an open system. The sets $X$ and $Y$ are the inputs and outputs of this system. The legs of the cospan, meaning the morphisms $i\colon X \to S$ and $o\colon Y \to S$, describe how these inputs and outputs are included in the system. In our application, $S$ is the set of species of a Petri net.

For example, we may take this reaction network:

treat it as a Petri net with some set $S$ of species:

and then turn that into an open Petri net by choosing any finite sets $X, Y$ and maps $i\colon X \to S$, $o\colon Y \to S$, for example like this:

(Notice that the maps including the inputs and outputs into the states of the system need not be one-to-one. This is technically useful, but it introduces some subtleties that I don’t feel like explaining right now.)

An open Petri net can thus be seen as a cospan of finite sets whose apex $S$ is ‘decorated’ with some extra information, namely a Petri net with $S$ as its set of species. Fong’s theory of decorated cospans lets us define a category with open Petri nets as morphisms, with composition given by gluing the outputs of one open Petri net to the inputs of another.
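At the level of species, the gluing is a pushout of finite sets: take the disjoint union of the two sets of species and identify the image of each shared boundary point on one side with its image on the other. Here is a minimal Python sketch of just that step, ignoring the Petri net decoration. The function name `compose_cospans`, the tagging scheme and the species names are hypothetical, chosen only for this illustration.

```python
def compose_cospans(S, out_S, T, in_T):
    """Glue apex S to apex T along a shared boundary Y.

    out_S and in_T are dicts sending each y in Y to an element of S or T.
    Returns a dict sending each tagged element ("S", s) or ("T", t) to a
    representative of its equivalence class in the pushout of S and T over Y.
    """
    # Start from the disjoint union, with every element its own class.
    parent = {("S", s): ("S", s) for s in S}
    parent.update({("T", t): ("T", t) for t in T})

    def find(x):
        # Follow parent pointers up to the class representative.
        while parent[x] != x:
            x = parent[x]
        return x

    # Identify the two images of each boundary point.
    for y in out_S:
        a, b = find(("S", out_S[y])), find(("T", in_T[y]))
        if a != b:
            parent[b] = a
    return {x: find(x) for x in parent}

# One boundary point, sent to species "B" on the left and "P" on the right.
glued = compose_cospans({"A", "B"}, {0: "B"}, {"P", "C"}, {0: "P"})
```

Because the maps from the boundary need not be one-to-one, gluing can also merge two species that were distinct on one side; the loop above handles that case too.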

We call the functor $\mathrm{RxNet} \to \mathrm{Dynam}$ gray-boxing because it hides some but not all the internal details of an open Petri net. (In the paper we draw it as a gray box, but that’s too hard here!)

We can go further and black-box an open dynamical system. This amounts to recording only the relation between input and output variables that must hold in steady state. We prove that black-boxing gives a functor $\square\colon \mathrm{Dynam} \to \mathrm{SemiAlgRel}$ (yeah, the box here should be black, and in our paper it is). Here $\mathrm{SemiAlgRel}$ is a category where the morphisms are semi-algebraic relations between real vector spaces, meaning relations defined by polynomial equations and inequalities. This relies on the fact that our dynamical systems involve algebraic vector fields, meaning those whose components are polynomials; more general dynamical systems would give more general relations.

That semi-algebraic relations are closed under composition is a nontrivial fact, a spinoff of the Tarski–Seidenberg theorem. This says that a subset of $\mathbb{R}^{n+1}$ defined by polynomial equations and inequalities can be projected down onto $\mathbb{R}^n$, and the resulting set is still definable in terms of polynomial identities and inequalities. This wouldn’t be true if we didn’t allow inequalities. It’s neat to see this theorem, important in mathematical logic, showing up in chemistry!
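A standard small example, not taken from the paper, shows why inequalities are needed. Project the parabola in the plane onto the first coordinate:

```latex
\[
A = \{(x,y) \in \mathbb{R}^2 : x = y^2\}, \qquad
\pi(A) = \{x \in \mathbb{R} : \exists y \; x = y^2\} = \{x \in \mathbb{R} : x \ge 0\}.
\]
```

The set $A$ is cut out by a single polynomial equation, but its projection is a half-line. A subset of $\mathbb{R}$ defined by polynomial equations alone is either finite or all of $\mathbb{R}$, so the half-line can only be described using an inequality.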

Structure of the paper

Okay, now you’re ready to read our paper! Here’s how it goes:

In Section 2 we review and compare reaction networks and Petri nets. In Section 3 we construct a symmetric monoidal category $\mathrm{RNet}$ where an object is a finite set and a morphism is an open reaction network (or more precisely, an isomorphism class of open reaction networks). In Section 4 we enhance this construction to define a symmetric monoidal category $\mathrm{RxNet}$ where the transitions of the open reaction networks are equipped with rate constants. In Section 5 we explain the open dynamical system associated to an open reaction network, and in Section 6 we construct a symmetric monoidal category $\mathrm{Dynam}$ of open dynamical systems. In Section 7 we construct the gray-boxing functor $\mathrm{RxNet} \to \mathrm{Dynam}$. In Section 8 we construct the black-boxing functor $\mathrm{Dynam} \to \mathrm{SemiAlgRel}$. We show both of these are symmetric monoidal functors.

Finally, in Section 9 we fit our results into a larger ‘network of network theories’. This is where various results in various papers I’ve been writing in the last few years start assembling to form a big picture! But this picture needs to grow….

I’m a big fan of suggestive notation, and RxNet is definitely now on my list of good examples!

As a neuroscientist, one of my interests is in how chemicals get from one part of a cell to the other, all while undergoing reactions at any locus in space. One could model this by chaining the local Petri nets, which leads me to wonder whether this device can be used infinitesimally (by extending to an infinite graph) to generate and analyze partial differential equations.

After years of reading about and toying with category theory, am I incorrect in thinking that there should be fairly simple constructs for these purposes?

The answer is roughly yes.

Petri nets as described already allow one to label chemicals by their location as well as their kind, and introduce ‘reactions’ that describe the transport (e.g. diffusion) of chemicals from one location to another, in addition to the usual reactions that change chemicals from one kind to another. The only limitation of the standard Petri net setup, as you hint, is that it involves just finitely many species, so one needs to discretize the set of possible locations.

But as you note, we can generalize Petri nets to allow for an infinite set of species. Doing this right is nontrivial, because if we use different species to indicate different locations in space, one also wants to replace sums over species by integrals… and if you’re a mathematician, you have to ask whether these integrals converge. Going further, you’d want to see if all the interesting theorems about chemical reaction networks, like the Deficiency Zero Theorem, generalize to this new context.

In short: a hand-wavy generalization is easy, but a serious treatment requires some work. Luckily, there are a lot of mathematicians called analysts who specialize in exactly this sort of work. But I don’t think most of it has been done yet.

In the meantime one can resort to a discretization of space, to keep the number of species finite. And this is just fine for numerical work.
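Here is a rough Python sketch of that discretization for a single kind of chemical on a line of sites: each species is a pair (kind, site), and transport is modelled by ‘hop’ reactions between neighbouring sites. The function names, the three-site example and the hop rate are assumptions made for illustration only.

```python
def hop_reactions(kind, n_sites, rate):
    """Transport reactions (kind, i) -> (kind, i + 1) and back, on a line."""
    hops = []
    for i in range(n_sites - 1):
        hops.append(((kind, i), (kind, i + 1), rate))
        hops.append(((kind, i + 1), (kind, i), rate))
    return hops

def rate_equation(hops, conc):
    """Mass-action rate equation for reactions with one input and one output."""
    deriv = {s: 0.0 for s in conc}
    for source, target, rate in hops:
        flow = rate * conc[source]  # mass action with a single input
        deriv[source] -= flow
        deriv[target] += flow
    return deriv

# All of chemical "A" starts at site 0 and begins to spread to site 1.
conc = {("A", 0): 1.0, ("A", 1): 0.0, ("A", 2): 0.0}
d = rate_equation(hop_reactions("A", 3, 1.0), conc)
```

With equal hop rates in both directions, the resulting rate equation is a discrete version of the diffusion equation on the line of sites.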

Thanks! I’m curious as to why algebra and analysis have resisted unification (unlike say, algebra and topology), but I suspect that’s way beyond addressable here.

That’s a very interesting question, which many people have thought about.

One answer people sometimes give is that analysis makes heavy use of alternating universal and existential quantifiers (“for every $\epsilon$ there exists a $\delta$ such that for all $x$ …”), while algebra tends to avoid these, making the reasoning “cleaner”. However, this neglects the fact that universal properties involve strings of alternating universal and existential quantifiers.

Another answer is more historical: category theory first arose at the borderline of algebra and topology when people realized they were using functors to reduce topology problems to algebra problems, and it hasn’t percolated into analysis as deeply.

I suspect the real answer will only be known when we do better unify analysis and other branches of math; this may require new ideas that we don’t have yet… and not having these ideas is what’s holding us back.

Referencing the latest arXiv with Coya and Rebro, do you have an idea about how to implement the relation for a memristor (should be easier than other non-affine elements)? The only way I could think of would double the number of variables.

I’ll eventually post a blog article about our new paper, and I’d rather have this conversation there. But your approach to handling memristors sounds good to me.

That would be better. Later then.

I just want to remark regarding that “invention” of Canedo et al: https://scholar.google.com/citations?view_op=view_citation&hl=en&user=r2zo9HQAAAAJ&sortby=pubdate&citation_for_view=r2zo9HQAAAAJ:D03iK_w7-QYC

that constant monitoring of workers like via the use of RFID or video etc. eg. in manufacturing plants is (so far) forbidden in Germany: http://lexetius.com/2008,2340 and thus as far as I know technological set-ups like the “invention” had to be implemented differently than planned, because trade unions were interfering. In particular as far as I know such a “patent” is (so far) impossible in Europe.

It might also be worthwhile in this context to check out some old (post-)Fordism patents from the last century.

Here’s a bit more about how our paper connects to other ideas in chemistry, written in response to a chemist’s questions:

There’s a tradition of studying chemical reactions in which concentrations of chemicals obey a set of differential equations called the ‘rate equation’, based on the law of mass action: the rate of a chemical reaction is proportional to the concentrations of the reacting substances.

In real life all chemical reactions are reversible and a closed system tends to settle down into an equilibrium obeying detailed balance: the rate of any reaction is equal to that of the reverse reaction. However, in some cases the rates of some reverse reactions are so low that it’s enlightening to consider what happens when they’re zero. Then we can have interesting phenomena, like solutions of the rate equation where the concentrations of chemicals are periodic as a function of time, or even chaotic. A famous example is the Belousov-Zhabotinsky reaction.

But the Belousov-Zhabotinsky reaction actually involves about 18 reactions, so it’s interesting to look for simpler model systems that display similar behavior. A famous one is the Brusselator. The reactions here are not reversible (they’re shown in the Wikipedia article I linked to), and the analysis is simplest when 2 of the 5 chemicals involved are treated as having concentrations so much higher than the rest that we can approximate these concentrations as fixed… even though these chemicals are getting used up.

This sort of thing led mathematical chemists to study chemical reaction networks where not all reactions are reversible and some concentrations are held fixed, or chemostatted.

The big revolution in chemical reaction network theory came in the 1960s, with the work of Aris, Feinberg, Jackson, Horn and others. They proved many theorems about the behavior of the rate equation for different classes of chemical reaction networks: existence, uniqueness or nonuniqueness of equilibrium solutions, periodic solutions, etc.

Around the 1970s, Prigogine focused attention on the nonequilibrium thermodynamics of ‘open’ systems, where for example chemicals are constantly being added and/or removed from a system. Then we can have ‘nonequilibrium steady states’, where there’s no change in chemical concentrations, but nonzero net flows: that is, a lack of detailed balance. Sometimes there exists a unique nonequilibrium steady state that is stable, but sometimes it becomes unstable and one sees oscillatory behavior. Prigogine developed a formalism to study this.

My work with Blake Pollard formalizes the notion of ‘open chemical reaction network’. We describe the processes that let you build a big open chemical reaction network from smaller pieces. We describe the laws obeyed by these ‘building up’ processes. We describe the ‘open rate equation’, a generalization of the rate equation for open chemical reaction networks. We describe how the open rate equation of a big open chemical reaction network is built up from the open rate equations of the pieces. We also describe how nonequilibrium steady states of a big open chemical reaction network are built up from nonequilibrium steady states of the pieces. The right language for describing all this is category theory, since that provides techniques for studying how open systems can be connected end-to-end (‘composition’) or in parallel (‘tensoring’).

The algebra you use is very reminiscent of category theory applied to information dissemination in social influence matrices, tracing outflows and reception variables as a dynamic system with feedback loops incorporating filters and condition either or, and if type statements. For me, the organic chemistry of endocrine cascades and the changeability of hormone signaling at the level of extremely rarified molarities, very much follows the types of outlines you’ve detailed. You have the what and how, but the bitter ontologies of why are always the difficult proofs of causality. Chemical signaling at the level of cellular receptors do seem to work as Operators with all the subtleties of a higher order linear algebra.

I had some thoughts myself about the mathematics of chemical reactions. My basis was to note that the set of substances with a binary operation is a “commutative idempotent magma” (CI-magma), for obvious reasons. Reaction conditions are not included in this picture, so one way to introduce them would be some “homomorphism” taking care of that. I dunno if much results from that (the lit. on CI-magmas as far as I can see is sparse) but anyway I think this is at least a concise picture of the structure of the beast. Got some take on that?

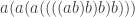

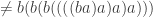

A common approach these days is to use a strict symmetric monoidal category where different substances (usually called ‘species’) are objects, the tensor product is denoted $+$ and a typical morphism would be a reaction like this:

This is a tiny bit like a commutative idempotent magma, but different. You can read more in Section 25.2 here:

• John Baez and Jacob Biamonte, Quantum Techniques for Stochastic Mechanics, World Scientific Press, Singapore, 2018. (Draft available on the arXiv.)

Interesting, thank you very much!

The Petri nets are familiar to the Chemist, though maybe not necessarily under that name :-). I see that you arrived at that by what we would call “reaction kinetic” considerations. When I started to think about it I had a different approach: just regard the thermodynamic equilibria.

So the basic question is about the types of the products and not how long it takes to get them. That would be something like a “reaction mechanism network”. (A side note here: what you write about the term “species” in chemistry is entirely true, but there is another thing that can be mentioned: chemists are well trained to strictly separate the “particle/molecule/atom” hierarchy from the “compound” hierarchy. There is usually a set of terms which forms a partition in this respect, and “species” strictly speaking belongs to the particle/molecule subset.) So I thought about compounds (anything that you can put into any type of container) as the elements of a set, with the binary operation (let’s write it multiplicatively for the moment) being a perfect mixture followed by infinite time. Then reactions can be written in terms of this product. It’s clear that $aa = a$: you mix the compound with itself and nothing happens. (The nice feature of multiplicativity here, I find, is that total amounts do not appear, only concentrations.) Also $ab = ba$ is clear. But except closedness, there is nothing more. So my conclusion was it’s a CI-magma. What is missing are different reaction conditions at which we wait for the thermodynamic equilibrium. So I thought you get that by a set of indexed morphisms, where the indices are the reaction conditions: I think it’s basically temperature and pressure, maybe some EM radiation. This morphism conserves idempotency and commutativity (besides closedness), so I thought one might call it “homomorphism” (but I am not sure).

An interesting feature of this formalization is that one can, for example, model that there is a difference between pouring $a$ into $b$ and $b$ into $a$, which seems to contradict commutativity at first glance, but that’s not true: pouring $a$ into $b$ means essentially starting with one mixture and going via intermediate mixtures to the final one, all within a CI-magma. So in that approach “kinetics” would enter the business from a more algebraic side.

Would it make sense to you?

As part of the Applied Category Theory 2018 school, Maru Sarazola wrote a blog article on open dynamical systems and their steady states. Check it out:

• Maru Sarazola, Dynamical systems and their steady states, 2 April 2018.

She compares two papers:

• David Spivak, The steady states of coupled dynamical systems compose according to matrix arithmetic.

• John Baez and Blake Pollard, A compositional framework for reaction networks, Reviews in Mathematical Physics 29 (2017), 1750028.

(Blog article here.)

My student Kenny Courser’s thesis has hit the arXiv:

• Kenny Courser, Open Systems: A Double Categorical Perspective, Ph.D. thesis, U. C. Riverside, 2020.