Last time I ended with a formula for the ‘Gibbs distribution’: the probability distribution that maximizes entropy subject to constraints on the expected values of some observables.

This formula is well-known, but I’d like to derive it here. My argument won’t be up to the highest standards of rigor: I’ll do a bunch of computations, and it would take more work to state conditions under which these computations are justified. But even a nonrigorous approach is worthwhile, since the computations will give us more than the mere formula for the Gibbs distribution.

I’ll start by reminding you of what I claimed last time. I’ll state it in a way that removes all unnecessary distractions, so go back to Part 20 if you want more explanation.

The Gibbs distribution

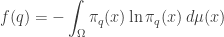

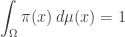

Take a measure space  with measure

with measure  Suppose there is a probability distribution

Suppose there is a probability distribution  on

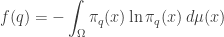

on  that maximizes the entropy

that maximizes the entropy

subject to the requirement that some integrable functions  on

on  have expected values equal to some chosen list of numbers

have expected values equal to some chosen list of numbers

(Unlike last time, now I’m writing  and

and  with superscripts rather than subscripts, because I’ll be using the Einstein summation convention: I’ll sum over any repeated index that appears once as a a superscript and once as a subscript.)

with superscripts rather than subscripts, because I’ll be using the Einstein summation convention: I’ll sum over any repeated index that appears once as a a superscript and once as a subscript.)

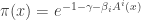

Furthermore, suppose  depends smoothly on

depends smoothly on  I’ll call it

I’ll call it  to indicate its dependence on

to indicate its dependence on  Then, I claim

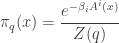

Then, I claim  is the so-called Gibbs distribution

is the so-called Gibbs distribution

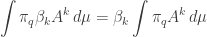

where

and

is the entropy of

Let’s show this is true!

Finding the Gibbs distribution

So, we are trying to find a probability distribution  that maximizes entropy subject to these constraints:

that maximizes entropy subject to these constraints:

We can solve this problem using Lagrange multipliers. We need one Lagrange multiplier, say  for each of the above constraints. But it’s easiest if we start by letting

for each of the above constraints. But it’s easiest if we start by letting  range over all of

range over all of  that is, the space of all integrable functions on

that is, the space of all integrable functions on  Then, because we want

Then, because we want  to be a probability distribution, we need to impose one extra constraint

to be a probability distribution, we need to impose one extra constraint

To do this we need an extra Lagrange multiplier, say

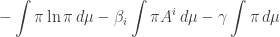

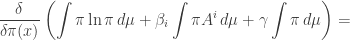

So, that’s what we’ll do! We’ll look for critical points of this function on

Here I’m using some tricks to keep things short. First, I’m dropping the dummy variable x which appeared in all of the integrals we had: I’m leaving it implicit. Second, all my integrals are over  so I won’t say that. And third, I’m using the Einstein summation convention, so there’s a sum over i implicit here.

so I won’t say that. And third, I’m using the Einstein summation convention, so there’s a sum over i implicit here.

Okay, now let’s do the variational derivative required to find a critical point of this function. When I was a math major taking physics classes, the way physicists did variational derivatives seemed like black magic to me. Then I spent months reading how mathematicians rigorously justified these techniques. I don’t feel like a massive digression into this right now, so I’ll just do the calculations—and if they seem like black magic, I’m sorry!

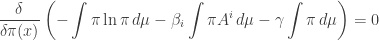

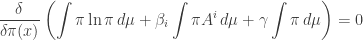

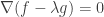

We need to find  obeying

obeying

or in other words

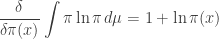

First we need to simplify this expression. The only part that takes any work, if you know how to do variational derivatives, is the first term. Since the derivative of  is

is  we have

we have

The second and third terms are easy, so we get

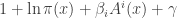

Thus, we need to solve this equation:

That’s easy to do:

Good! It’s starting to look like the Gibbs distribution!

We now need to choose the Lagrange multipliers  and

and  to make the constraints hold. To satisfy this constraint

to make the constraints hold. To satisfy this constraint

we must choose  so that

so that

or in other words

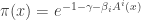

Plugging this into our earlier formula

we get this:

Great! Even more like the Gibbs distribution!
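To make the formula concrete, here is a minimal Python sketch that evaluates a distribution of this exponential form on a finite set. Everything specific in it is an assumption made for illustration and does not come from the derivation: the set is the six faces of a die with counting measure, the two observables are $x$ and $x^2$, and the function name `gibbs` and the multiplier values are hypothetical.

```python
import math

def gibbs(beta, observables, points):
    """Gibbs-type distribution on a finite set with counting measure.

    Returns (pi, Z), where pi[k] = exp(-beta_i A^i(x_k)) / Z and Z is the
    normalizing sum over all points.
    """
    weights = []
    for x in points:
        # Einstein-style sum over i: beta_i A^i(x)
        exponent = sum(b * A(x) for b, A in zip(beta, observables))
        weights.append(math.exp(-exponent))
    Z = sum(weights)
    return [w / Z for w in weights], Z

# Illustrative setup (an assumption): a six-sided die with
# observables A^1(x) = x and A^2(x) = x^2.
faces = [1, 2, 3, 4, 5, 6]
observables = [lambda x: x, lambda x: x * x]

uniform, Z0 = gibbs([0.0, 0.0], observables, faces)  # all multipliers zero
tilted, Z1 = gibbs([0.4, 0.0], observables, faces)   # positive multiplier on x

print(uniform)
print(tilted)
```

With all multipliers set to zero the weights are equal, so the result is the uniform distribution. A positive multiplier on $x$ shifts probability toward the small faces.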

By the way, you must have noticed the “1” that showed up here:

$$\frac{\delta}{\delta \pi(x)} \int \pi \ln \pi \, d\mu = 1 + \ln \pi(x)$$

It buzzed around like an annoying fly in the otherwise beautiful calculation, but eventually went away. This is the same irksome “1” that showed up in Part 19. Someday I’d like to say a bit more about it.

Now, where were we? We were trying to show that

minimizes entropy subject to our constraints. So far we’ve shown

is a critical point. It’s clear that

so  really is a probability distribution. We should show it actually maximizes entropy subject to our constraints, but I will skip that. Given that,

really is a probability distribution. We should show it actually maximizes entropy subject to our constraints, but I will skip that. Given that,  will be our claimed Gibbs distribution

will be our claimed Gibbs distribution  if we can show

if we can show

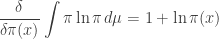

This is interesting! It’s saying our Lagrange multipliers  actually equal the so-called conjugate variables

actually equal the so-called conjugate variables  given by

given by

where  is the entropy of

is the entropy of

There are two ways to show this: the easy way and the hard way. The easy way is to reflect on the meaning of Lagrange multipliers, and I’ll sketch that way first. The hard way is to use brute force: just compute $p_i$ and show it equals $\beta_i$. This is a good test of our computational muscle, but more importantly, it will help us discover some interesting facts about the Gibbs distribution.

The easy way

Consider a simple Lagrange multiplier problem where you’re trying to find a critical point of a smooth function

$$f \colon \mathbb{R}^2 \to \mathbb{R}$$

subject to the constraint

$$g = c$$

for some smooth function

$$g \colon \mathbb{R}^2 \to \mathbb{R}$$

and constant $c$. (The function $f$ here has nothing to do with the $f$ in the previous sections.) To answer this we introduce a Lagrange multiplier $\lambda$ and seek points where

$$\nabla (f - \lambda g) = 0$$

This works because the above equation says

$$\nabla f = \lambda \nabla g$$

Geometrically this means we’re at a point where the gradient of $f$ points at right angles to the level surface of $g$.

Thus, to first order we can’t change $f$ by moving along the level surface of $g$.

But also, if we start at a point where

$$\nabla f = \lambda \nabla g$$

and we begin moving in any direction, the function $f$ will change at a rate equal to $\lambda$ times the rate of change of $g$. That’s just what the equation says! And this fact gives a conceptual meaning to the Lagrange multiplier $\lambda$.
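The interpretation of the multiplier as a rate of change can be checked on a toy problem. The sketch below is an illustration under assumptions of its own, not part of the argument: the objective is `f(x, y) = x * y`, the constraint function is `g(x, y) = x + y`, and the critical point on the line `g = c` is worked out by hand as `x = y = c / 2` with multiplier `c / 2`. It then compares that multiplier with a finite-difference estimate of how the constrained critical value of `f` changes as `c` moves.

```python
def f(x, y):
    return x * y

def g(x, y):
    return x + y

def critical_point(c):
    """Critical point of f on the line g = c, worked out by hand.

    grad f = (y, x) and grad g = (1, 1), so grad f = lam * grad g
    forces x = y = lam, and the constraint x + y = c gives lam = c / 2.
    """
    x = y = c / 2
    lam = c / 2
    return x, y, lam

def critical_value(c):
    x, y, _ = critical_point(c)
    return f(x, y)

c, h = 3.0, 1e-6
x, y, lam = critical_point(c)

# Central-difference estimate of d(critical value)/dc.
rate = (critical_value(c + h) - critical_value(c - h)) / (2 * h)

print(lam, rate)
```

The two printed numbers should agree up to rounding: the multiplier is the rate at which the constrained critical value changes when the constraint value is nudged.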

Our situation is more complicated, since our functions are defined on the infinite-dimensional space $L^1(\Omega)$, and we have an n-tuple of constraints with an n-tuple of Lagrange multipliers. But the same principle holds.

So, when we are at a solution $\pi$ of our constrained entropy-maximization problem, and we start moving the point $\pi$ by changing the value of the ith constraint, namely $q^i$, the rate at which the entropy changes will be $\beta_i$ times the rate of change of $q^i$. So, we have

$$\frac{\partial f}{\partial q^i} = \beta_i$$

But this is just what we needed to show!

The hard way

Here’s another way to show

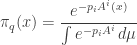

We start by solving our constrained entropy-maximization problem using Lagrange multipliers. As already shown, we get

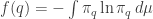

Then we’ll compute the entropy

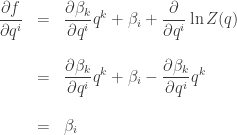

Then we’ll differentiate this with respect to  and show we get

and show we get

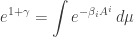

Let’s try it! The calculation is a bit heavy, so let’s write  for the so-called partition function

for the so-called partition function

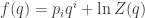

so that

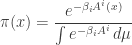

and the entropy is

This is the sum of two terms. The first term

is  times the expected value of

times the expected value of  with respect to the probability distribution

with respect to the probability distribution  all summed over

all summed over  But the expected value of

But the expected value of  is

is  so we get

so we get

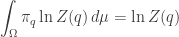

The second term is easier:

since  integrates to 1 and the partition function

integrates to 1 and the partition function  doesn’t depend on

doesn’t depend on

Putting together these two terms we get an interesting formula for the entropy:

$$f(q) = \beta_k q^k + \ln Z$$

This formula is one reason this brute-force approach is actually worthwhile! I’ll say more about it later.
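The relation between entropy, multipliers, expected values and the logarithm of the partition function is easy to test numerically. Here is a small sketch, assuming a finite set (the faces of a die with counting measure), a single observable $A(x) = x$, and an arbitrarily chosen multiplier value. None of these choices come from the derivation, and the variable names are only illustrative.

```python
import math

FACES = [1, 2, 3, 4, 5, 6]   # assumed finite set with counting measure
beta = 0.3                   # arbitrary illustrative multiplier

weights = [math.exp(-beta * x) for x in FACES]
Z = sum(weights)                                  # partition function
pi = [w / Z for w in weights]                     # exponential-form distribution

q = sum(p * x for p, x in zip(pi, FACES))         # expected value of A(x) = x
entropy = -sum(p * math.log(p) for p in pi)       # entropy computed directly
from_formula = beta * q + math.log(Z)             # multiplier * q + ln Z

print(entropy, from_formula)
```

The two printed values should agree up to floating-point rounding, whatever value of `beta` is chosen.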

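But for now, let’s use this formula to show what we’re trying to show, namely

$$\frac{\partial f}{\partial q^i} = \beta_i$$

For starters,

$$\begin{aligned}
\frac{\partial f}{\partial q^i} &= \frac{\partial}{\partial q^i} \left( \beta_k q^k + \ln Z \right) \\
&= \frac{\partial \beta_k}{\partial q^i} \, q^k + \beta_k \frac{\partial q^k}{\partial q^i} + \frac{\partial}{\partial q^i} \ln Z \\
&= \frac{\partial \beta_k}{\partial q^i} \, q^k + \beta_k \delta^k_i + \frac{\partial}{\partial q^i} \ln Z \\
&= \frac{\partial \beta_k}{\partial q^i} \, q^k + \beta_i + \frac{\partial}{\partial q^i} \ln Z
\end{aligned}$$

where we played a little Kronecker delta game with the second term.

Now we just need to compute the third term:

$$\begin{aligned}
\frac{\partial}{\partial q^i} \ln Z &= \frac{1}{Z} \frac{\partial}{\partial q^i} \int e^{-\beta_j A^j} \, d\mu \\
&= \frac{1}{Z} \int \frac{\partial}{\partial q^i} e^{-\beta_j A^j} \, d\mu \\
&= -\frac{\partial \beta_k}{\partial q^i} \, \frac{1}{Z} \int A^k e^{-\beta_j A^j} \, d\mu \\
&= -\frac{\partial \beta_k}{\partial q^i} \int A^k \pi \, d\mu \\
&= -\frac{\partial \beta_k}{\partial q^i} \, q^k
\end{aligned}$$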

Ah, you don’t know how good it feels, after years of category theory, to be doing calculations like this again!

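Now we can finish the job we started:

$$\frac{\partial f}{\partial q^i} = \frac{\partial \beta_k}{\partial q^i} \, q^k + \beta_i - \frac{\partial \beta_k}{\partial q^i} \, q^k = \beta_i$$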

Voilà!
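The same conclusion can be checked numerically in a small case. The sketch below makes several assumptions for illustration only: the set is the six faces of a die with counting measure, there is one observable $A(x) = x$, the multiplier is found by bisection (a numerical technique swapped in here, not something used in the derivation), and the derivative of the entropy is estimated by a central difference. The function names are hypothetical.

```python
import math

FACES = [1, 2, 3, 4, 5, 6]   # assumed finite set with counting measure

def gibbs(beta):
    """Distribution proportional to exp(-beta * x) on the faces."""
    weights = [math.exp(-beta * x) for x in FACES]
    Z = sum(weights)
    return [w / Z for w in weights]

def mean(pi):
    return sum(p * x for p, x in zip(pi, FACES))

def entropy(pi):
    return -sum(p * math.log(p) for p in pi)

def solve_beta(q, lo=-50.0, hi=50.0, steps=200):
    """Find beta whose distribution has mean q, by bisection.

    This works because the mean is strictly decreasing in beta.
    """
    for _ in range(steps):
        mid = (lo + hi) / 2
        if mean(gibbs(mid)) > q:
            lo = mid   # mean too large, so beta must increase
        else:
            hi = mid
    return (lo + hi) / 2

def f(q):
    """Entropy of the constrained maximizer, as a function of q."""
    return entropy(gibbs(solve_beta(q)))

q, h = 4.5, 1e-5
beta = solve_beta(q)
df_dq = (f(q + h) - f(q - h)) / (2 * h)   # central-difference derivative

print(beta, df_dq)
```

The two printed numbers should agree to several decimal places. Since the chosen $q$ is above the uniform mean of 3.5, both come out negative: pushing the mean further from 3.5 lowers the entropy.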

Conclusions

We’ve learned the formula for the probability distribution that maximizes entropy subject to some constraints on the expected values of observables. But more importantly, we’ve seen that the anonymous Lagrange multipliers $\beta_i$ that show up in this problem are actually the partial derivatives of entropy! They equal

$$p_i = \frac{\partial f}{\partial q^i}$$

Thus, they are rich in meaning. From what we’ve seen earlier, they are ‘surprisals’. They are analogous to momentum in classical mechanics and have the meaning of intensive variables in thermodynamics:

| | Classical Mechanics | Thermodynamics | Probability Theory |
|---|---|---|---|
| q | position | extensive variables | probabilities |
| p | momentum | intensive variables | surprisals |
| S | action | entropy | Shannon entropy |

Furthermore, by showing $\beta_i = p_i$ the hard way we discovered an interesting fact. There’s a relation between the entropy and the logarithm of the partition function:

$$f(q) = p_i q^i + \ln Z$$

(We proved this formula with $\beta_i$ replacing $p_i$, but now we know those are equal.)

This formula suggests that the logarithm of the partition function is important—and it is! It’s closely related to the concept of free energy—even though ‘energy’, free or otherwise, doesn’t show up at the level of generality we’re working at now.

This formula should also remind you of the tautological 1-form on the cotangent bundle $T^\ast Q$, namely

$$\theta = p_i \, dq^i$$

It should remind you even more of the contact 1-form on the contact manifold $T^\ast Q \times \mathbb{R}$, namely

$$\alpha = -dS + p_i \, dq^i$$

Here $S$ is a coordinate on the contact manifold that’s a kind of abstract stand-in for our entropy function $f$.

So, it’s clear there’s a lot more to say: we’re seeing hints of things here and there, but not yet the full picture.

For all my old posts on information geometry, go here:

• Information geometry.

Posted by John Baez
